Lecture Series of the Physics Degree Programmes of the Department of Mathematics and Physics

2016 Edition

Marco Barbieri

Department of Sciences, Università Roma Tre

A scanner darkly – Generalised measurements in quantum information

Poster: 12 January 2016, 15:00, Aula B

After a century of solid training in quantum mechanics, we are now well aware that the act of measurement is not a harmless, passive inspection but is quite intrusive to the evolution of the system we want to observe. Still, there exists the possibility that we can simply take a glance at a system and reduce the perturbation we induce. There is, however, a price to pay in terms of the information we get with these generalised measurements. In this talk, we will first review the concepts and the methods behind such generalised, weak measurements, and then move on to discussing their application to quantum technologies, with particular attention to communications and metrology. We will discuss how they can be implemented in a laboratory, what advantages they might (or might not!) provide over standard measurement schemes, and highlight how their results are at odds with a simple, intuitive interpretation of quantum phenomena.
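The trade-off between information gained and perturbation induced can be illustrated with a minimal sketch. It assumes a textbook two-outcome qubit measurement with diagonal Kraus operators and a tunable `strength` parameter; the function names and the choice of test states are illustrative assumptions and are not taken from the talk.

```python
import math

def kraus_operators(strength):
    """Diagonals of the two Kraus operators M0 and M1 (assumed model).

    strength = 0: no information gained, no disturbance.
    strength = 1: projective measurement in the {|0>, |1>} basis.
    """
    a = math.sqrt((1 + strength) / 2)
    b = math.sqrt((1 - strength) / 2)
    return [(a, b), (b, a)]

def measure(rho, strength):
    """Return outcome probabilities and the outcome-averaged final state.

    rho is a real 2x2 density matrix [[r00, r01], [r10, r11]].
    """
    probs = []
    rho_out = [[0.0, 0.0], [0.0, 0.0]]
    for d in kraus_operators(strength):
        # For a diagonal M, (M rho M^T)[i][j] = d[i] * rho[i][j] * d[j].
        term = [[d[i] * rho[i][j] * d[j] for j in range(2)] for i in range(2)]
        probs.append(term[0][0] + term[1][1])
        for i in range(2):
            for j in range(2):
                rho_out[i][j] += term[i][j]
    return probs, rho_out

plus = [[0.5, 0.5], [0.5, 0.5]]  # |+><+|, maximal coherence
zero = [[1.0, 0.0], [0.0, 0.0]]  # |0><0|

for k in (0.0, 0.3, 0.9, 1.0):
    p_zero, _ = measure(zero, k)      # how well outcome 0 identifies |0>
    _, rho_after = measure(plus, k)   # how much coherence of |+> survives
    print(f"strength={k:.1f}  P(outcome 0 | state 0)={p_zero[0]:.3f}  "
          f"remaining coherence={2 * rho_after[0][1]:.3f}")
```

In this model the probability of the correct outcome grows as (1 + strength)/2 while the surviving coherence shrinks as sqrt(1 - strength²), so a gentler measurement disturbs less but also reveals less.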

Angelo De Santis

INGV, Rome, Italy

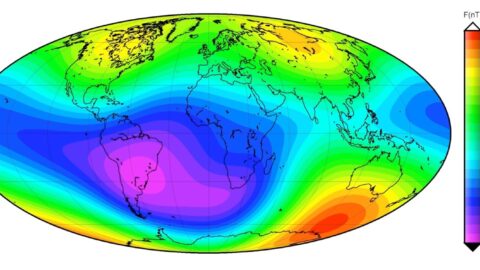

Geosystemics as a Systemic View of the Earth’s Magnetic Field: clues for an Imminent Geomagnetic Transition

Poster: 2 February 2016, 15:00, Aula B

Geosystemics studies the Earth as a whole to look for possible couplings among the subsystems composing our planet, and investigates whether the process of concern persists in its typical (present) state or is approaching a dynamical change. This presentation will provide this view for the Earth’s magnetic field, reviewing most of the results obtained in our recent works. The main tools used by geosystemics are nonlinear quantities, such as some kinds of entropy.

Through them, it is possible to: (a) establish the chaoticity and ergodicity of the recent geomagnetic field in a direct and simple way; and (b) identify the most extreme events in its history, namely the most rapid and the slowest ones, i.e., jerks and polarity changes (reversals or excursions). In particular, regarding the latter phenomena, these entropic concepts, together with the theory of critical transitions, provide some clues for a possible imminent change of the dynamical regime of the geomagnetic field.

Susanna Corti

ISAC-CNR, Bologna, Italy

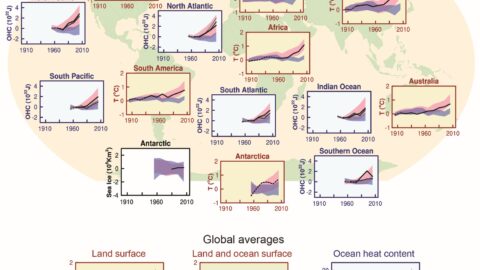

On the Reliability of Multi-Year Forecasts of Climate

Poster: 8 March 2016, 15:00, Aula B

Modelling climate is currently one of the most challenging problems in science and yet also one of the most urgent problems for the future of society. Thanks to the Intergovernmental Panel on Climate Change (IPCC), there is a vast literature on projections of climate change, and its most recent assessment shows a large range in the projected global warming, even for identical external forcing. We do not know exactly, and there is no simple way to find out, what proportion of these differences is due to deficiencies in model physics and model resolution, and what part is due to the intrinsic chaotic nature of the coupled climate system. However, it is essential for the development of the Global Framework for Climate Services that climate simulations are realistic and climate forecasts are reliable. In this lecture it will be shown that model resolution plays a fundamental role in the correct representation of the non-Gaussian probability distribution associated with the climatology of quasi-persistent weather regimes. A global atmospheric model with horizontal resolution typical of that used in operational numerical weather prediction is able to simulate with remarkable accuracy the spatial patterns of Euro-Atlantic weather regimes. By contrast, the same model, integrated at a resolution more typical of current climate models, shows no statistically significant evidence of such non-Gaussian regime structures, and the spatial structures of the corresponding regimes are not accurate. The correct simulation of such regimes has wide implications for the climate system. There is evidence that in a dynamical system with regime structure, the time-mean response of the system to some imposed forcing (which here could be thought of as enhanced greenhouse gas concentration) is in part determined by the change in frequency of occurrence of the naturally occurring regimes.
As such, a model that fails to simulate observed regime structures well could qualitatively fail to simulate the correct response to this imposed forcing. The issue of reliability of decadal predictions (“the fascinating baby that all wish to talk about”) is addressed as well. Such predictions must be probabilistic, because of inevitable uncertainties, firstly in knowledge of the initial state, secondly in the computational representation of the underlying equations of motion, and thirdly in the so-called “forcing” terms, which include not only greenhouse gas concentrations, but also volcanic and other aerosols. If such predictions could be shown to be reliable when predicting non-climatological probabilities, there can be little doubt about their utility across a range of application sectors. However, has the science of decadal forecasting advanced to the stage that potential users could indeed rely on specific multi-year predictions? Some results from the CMIP5 and ECMWF decadal prediction datasets will be shown in an attempt to answer this question.

Michela Marafini

Centro Fermi and INFN Roma 1

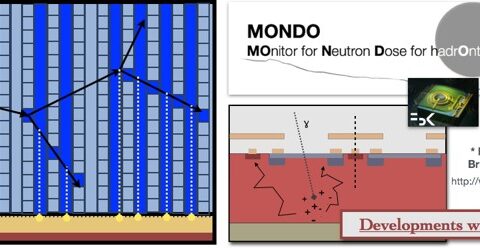

Secondary neutrons in Particle Therapy: the MONDO Project

Poster: 12 April 2016, 15:00, Aula B

In Charged Particle Therapy, cancer treatments are performed using accelerated charged particles whose high irradiation precision and conformity allow the destruction of the tumor while sparing the surrounding healthy tissues. Dose release monitoring devices using photons and charged particles produced by the beam interaction with the patient body have already been proposed, but no attempt based on the detection of the abundant neutron component of the secondary radiation has been made yet. The reduced attenuation length of neutrons yields a secondary particle sample that is larger in number when compared to photons and charged particles. Furthermore, neutrons allow for a backtracking of the emission point that is not affected by multiple scattering. Since neutrons can release a significant dose far away from the tumor region, a precise measurement of their flux, production energy and angle distributions is eagerly needed in order to improve the Treatment Planning Systems (TPS) software, so as to properly take into account not only the normal tissue toxicity in the target region, but also the risk of late complications in the whole body. The technical challenges posed by a neutron detector aiming for high detection efficiency and good backtracking precision will be addressed within the MONDO (MOnitor for Neutron Dose in hadrOntherapy) project. The main goal of MONDO is to develop a tracking detector targeting fast and ultrafast secondary neutrons. The neutron tracking principle is based on the reconstruction of two consecutive elastic scattering interactions of a neutron with a target material. The full reconstruction of the protons produced in elastic interactions with the detector material will be used to measure the energy and direction of the impinging neutron. The tracker is composed of a matrix (10 × 10 × 20 cm³) of square scintillating fibers with a 250 μm side.
The fibers are used at the same time as target for the elastic n-p scattering of the impinging neutrons and as active detector for the recoiling protons. The number of photons produced by the protons that undergo elastic scattering with the neutrons and interact with the detector fibers is extremely small and requires a novel technology capable of high single-photon detection efficiency or high light amplification. The chosen layout will implement the recent CMOS SPAD Array technology (www.spadnet.eu), aiming for single-photon detection. The neutron tracker will measure the neutron production yields as a function of production angle and energy. The detector performance will be optimized using a detailed MC simulation.
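The double-scattering principle described in this abstract can be sketched with simple kinematics. The example below is an illustration under loud assumptions, not the MONDO reconstruction: it treats n-p elastic scattering non-relativistically with equal neutron and proton masses and a target proton at rest, which is only a rough approximation at the energies relevant to particle therapy. The function `reconstruct_neutron` and all numerical values are hypothetical.

```python
import math

def unit(v):
    """Normalise a 3-vector."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def reconstruct_neutron(vertex1, vertex2, e_p1, dir_p1, e_p2, dir_p2):
    """Estimate incident neutron energy and direction from two n-p scatterings.

    Assumption: non-relativistic, equal masses, so a recoil proton emitted at
    angle theta to the neutron carries E_p = E_n * cos(theta)**2.
    Energies are kinetic energies in MeV; directions need not be normalised.
    """
    # The neutron direction between the scatterings is fixed by the two vertices.
    dir_n1 = unit(tuple(b - a for a, b in zip(vertex1, vertex2)))
    # The second recoil gives the neutron energy after the first scattering.
    # (Undefined if the second proton is emitted at exactly 90 degrees.)
    cos_theta2 = dot(unit(dir_p2), dir_n1)
    e_n1 = e_p2 / cos_theta2 ** 2
    # Energy conservation at the first vertex.
    e_n0 = e_p1 + e_n1
    # Momentum conservation at the first vertex (p proportional to sqrt(E)).
    p0 = tuple(math.sqrt(e_p1) * p + math.sqrt(e_n1) * n
               for p, n in zip(unit(dir_p1), dir_n1))
    return e_n0, unit(p0)

# Build a synthetic event: a 100 MeV neutron travelling along +z.
theta1 = math.radians(30.0)
dir_p1 = (math.sin(theta1), 0.0, math.cos(theta1))
e_p1 = 100.0 * math.cos(theta1) ** 2
dir_n1 = (-math.cos(theta1), 0.0, math.sin(theta1))  # perpendicular to dir_p1
theta2 = math.radians(45.0)
dir_p2 = (dir_n1[0] * math.cos(theta2) + dir_n1[2] * math.sin(theta2), 0.0,
          -dir_n1[0] * math.sin(theta2) + dir_n1[2] * math.cos(theta2))
e_p2 = (100.0 - e_p1) * math.cos(theta2) ** 2
vertex1 = (0.0, 0.0, 0.0)
vertex2 = tuple(3.0 * c for c in dir_n1)

energy, direction = reconstruct_neutron(vertex1, vertex2, e_p1, dir_p1, e_p2, dir_p2)
print(f"reconstructed energy = {energy:.3f} MeV, direction = {direction}")
```

The sketch recovers the energy and direction used to build the synthetic event; a realistic reconstruction would need relativistic kinematics and the detector resolution on proton energy and track direction.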

Enrico Costa

IAPS-INAF

Toward a new window in Astrophysics: X-ray Polarimetry

Poster: 10 May 2016, 15:00, Aula B

Polarimetry is an almost unexplored window of X-ray Astronomy.

The poor sensitivity of traditional techniques (Bragg diffraction at 45° and Thomson scattering around 90°) resulted in a poor output from the pioneering experiments of the 1970s, and polarimetry was not included in any of the following missions. The development of a technique based on imaging the tracks of photoelectrons absorbed in a gas made feasible a new approach based on X-ray optics with an imaging detector in the focus. Such a telescope makes it possible to measure simultaneously the position, the time and the energy of the photon and the ejection angle of the photoelectron. From the angular distribution the linear polarization is derived. The result is a high-sensitivity imaging, timing and spectroscopy polarimeter. XIPE (X-ray Imaging Polarimetry Explorer) is one of the three missions competing as finalists for the fourth medium-size mission of the European Space Agency. It is based on three grazing-incidence telescopes with three polarimeters in the focus. It can allow a dramatic step forward in sensitivity, opening up this window of High Energy Astrophysics. According to the literature, almost all fields of High Energy Astronomy will benefit from polarimetric measurements. XIPE will be an observatory open to the community worldwide, capable of performing measurements of high significance in a sample of 100 to 200 sources.

I will give some hints of the most interesting hot topics to be studied and solved with XIPE.

- How particles are accelerated in shocks in Pulsar Wind Nebulae and in shell-like Supernova Remnants

- What the plasma and magnetic field structure is in accretion-driven binary pulsars

- What the effect of QED is in the extreme magnetic fields of magnetars

- What the spin of black holes in galactic binaries is

- What the activity of the black hole at the center of our Galaxy was a few hundred years ago

- Which physical mechanisms are active in blazars and what the source of Comptonized photons is

- What evidence polarimetry can contribute to the search for effects of Quantum Gravity and of photon-axion oscillation in inter-cluster magnetic fields.
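The step in this abstract where linear polarization is derived from the angular distribution of the photoelectrons can be sketched as follows. This is a minimal illustration, not the XIPE analysis chain: it assumes the emission angles follow 1 + a·cos(2(φ − φ0)), where the amplitude a equals the polarization degree times an instrument modulation factor, and it uses a simple Stokes-like estimator. The modulation factor of 0.5, the sample size and the function names are hypothetical.

```python
import math
import random

def sample_angles(n, amplitude, phi0, rng):
    """Draw n angles from f(phi) ~ 1 + amplitude*cos(2*(phi - phi0)) by rejection."""
    angles = []
    while len(angles) < n:
        phi = rng.uniform(-math.pi, math.pi)
        accept_level = 1.0 + amplitude * math.cos(2 * (phi - phi0))
        if rng.uniform(0.0, 1.0 + amplitude) <= accept_level:
            angles.append(phi)
    return angles

def estimate_polarization(angles, modulation_factor):
    """Return (polarization degree, polarization angle) from emission angles.

    For the assumed distribution, 2*<cos 2phi> = a*cos(2*phi0) and
    2*<sin 2phi> = a*sin(2*phi0), so the pair behaves like Stokes Q and U.
    """
    n = len(angles)
    q = 2.0 * sum(math.cos(2 * p) for p in angles) / n
    u = 2.0 * sum(math.sin(2 * p) for p in angles) / n
    amplitude = math.hypot(q, u)
    return amplitude / modulation_factor, 0.5 * math.atan2(u, q)

rng = random.Random(1)
true_degree, true_angle, modulation_factor = 0.6, 0.4, 0.5  # illustrative values
angles = sample_angles(100_000, true_degree * modulation_factor, true_angle, rng)
degree, angle = estimate_polarization(angles, modulation_factor)
print(f"estimated degree = {degree:.3f}, estimated angle = {angle:.3f} rad")
```

The statistical error on the estimated degree scales roughly as sqrt(2/N) divided by the modulation factor, which is why both a large collecting area and a high modulation factor matter for sensitivity.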

Andrea Romanino

SISSA/ISAS, Trieste

The LHC challenge and the 750 GeV diphoton excess

Poster: 7 June 2016, 15:00, Aula B

I will discuss the challenge posed by the LHC results to the theoretical expectations on what might have been found. In particular, I will focus i) on the lack (so far) of experimental support for popular scenarios for TeV physics and ii) on the possible theoretical interpretation of the diphoton excess at 750 GeV.

Giuseppe Falci

Università degli Studi di Catania

1/f noise: Implications for solid-state quantum information

Poster: 8 November 2016, 15:00, Aula B

The efficiency of future devices for quantum information processing is limited mostly by the finite decoherence rates of the individual qubits and quantum gates. Recently, substantial progress has been achieved in extending the time over which a solid-state qubit demonstrates coherent dynamics. This progress is based mostly on the successful isolation of the qubits from external decoherence sources, obtained by engineering. Under these conditions, the material-inherent sources of noise start to play a crucial role. In most cases, quantum devices are affected by noise whose spectrum decreases with frequency f approximately as 1/f. According to the present point of view, such noise is due to material- and device-specific microscopic degrees of freedom interacting with quantum variables of the nanodevice. The simplest picture is that the environment that destroys the phase coherence of the device can be thought of as a system of two-state fluctuators, which experience random hops between their states. If the hopping times are distributed in an exponentially broad domain, the resulting fluctuations have a spectrum close to 1/f in a large frequency range. We discuss the current state of the theory of decoherence due to 1/f noise, the basic mechanisms of such noise in various nanodevices, and models describing the interaction of the noise sources with quantum devices. The main focus is to analyze how 1/f noise destroys their coherent operation, starting from individual qubits based on superconducting circuits, and to discuss strategies for minimizing the noise-induced decoherence.
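The statement that many two-state fluctuators with broadly distributed hopping times produce a spectrum close to 1/f can be checked numerically. The sketch below assumes that each fluctuator contributes a Lorentzian spectrum γ/(γ² + ω²) and that the switching rates γ are log-uniformly spread over six decades; the rate range, the number of fluctuators and the function names are illustrative assumptions, not values from the talk.

```python
import math

def lorentzian(omega, gamma):
    """Spectrum (up to a constant) of one fluctuator with switching rate gamma."""
    return gamma / (gamma ** 2 + omega ** 2)

def ensemble_spectrum(omega, gammas):
    """Sum of the spectra of independent fluctuators with equal weights."""
    return sum(lorentzian(omega, g) for g in gammas)

def log_slope(spectrum, w1, w2):
    """Slope of log(spectrum) versus log(omega) between w1 and w2."""
    return ((math.log(spectrum(w2)) - math.log(spectrum(w1)))
            / (math.log(w2) - math.log(w1)))

# Rates log-uniformly spaced between 1e-3 and 1e3 (arbitrary units).
n = 601
gammas = [10 ** (-3 + 6 * k / (n - 1)) for k in range(n)]

for w1, w2 in [(0.1, 1.0), (1.0, 10.0)]:
    many = log_slope(lambda w: ensemble_spectrum(w, gammas), w1, w2)
    single = log_slope(lambda w: lorentzian(w, 1.0), w1, w2)
    print(f"omega in [{w1}, {w2}]: ensemble slope = {many:.3f}, "
          f"single-fluctuator slope = {single:.3f}")
```

Well inside the range of rates, the ensemble slope stays close to -1, while a single fluctuator crosses over from a flat spectrum to 1/ω² around its own switching rate.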